CyberEngine

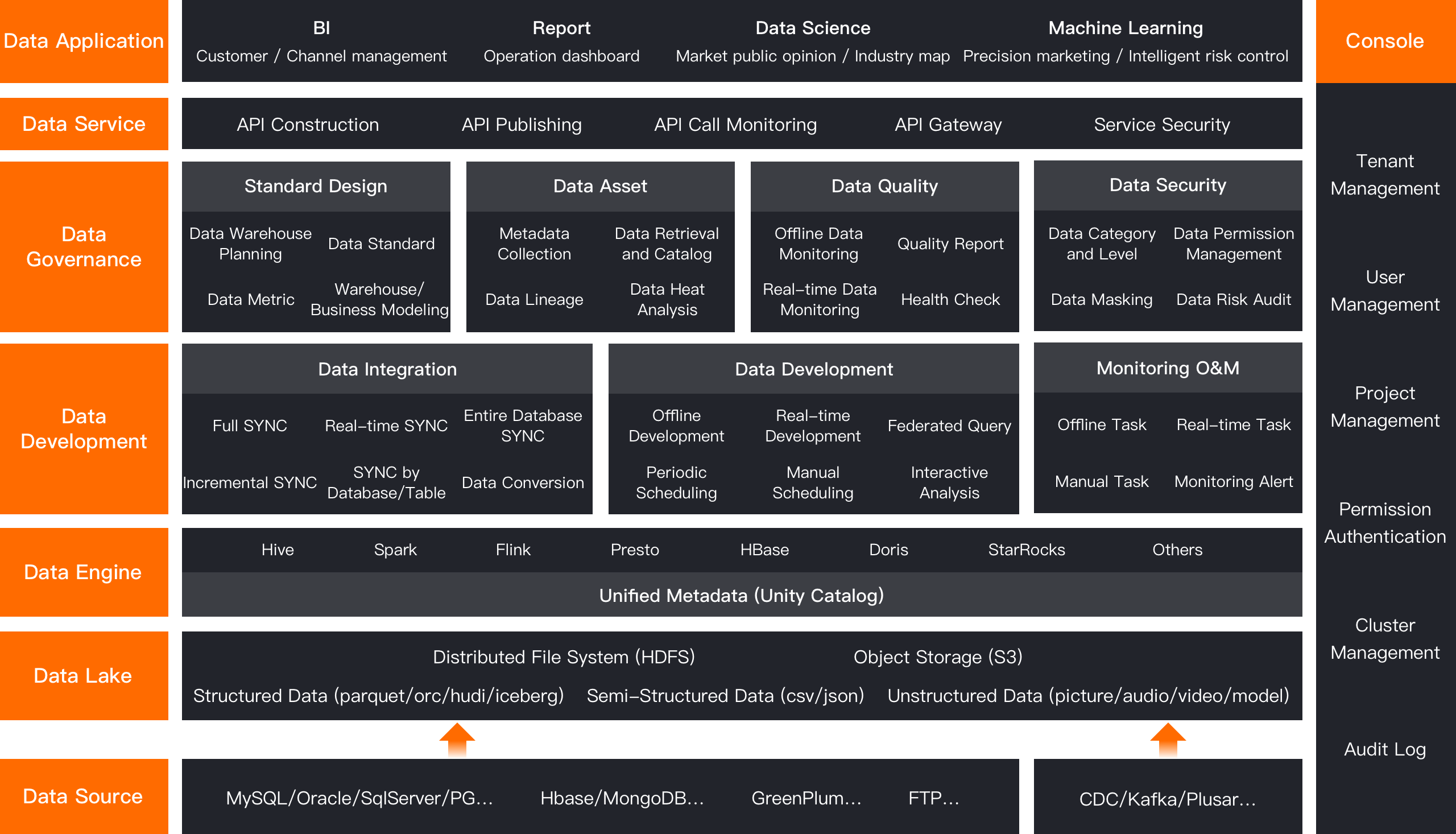

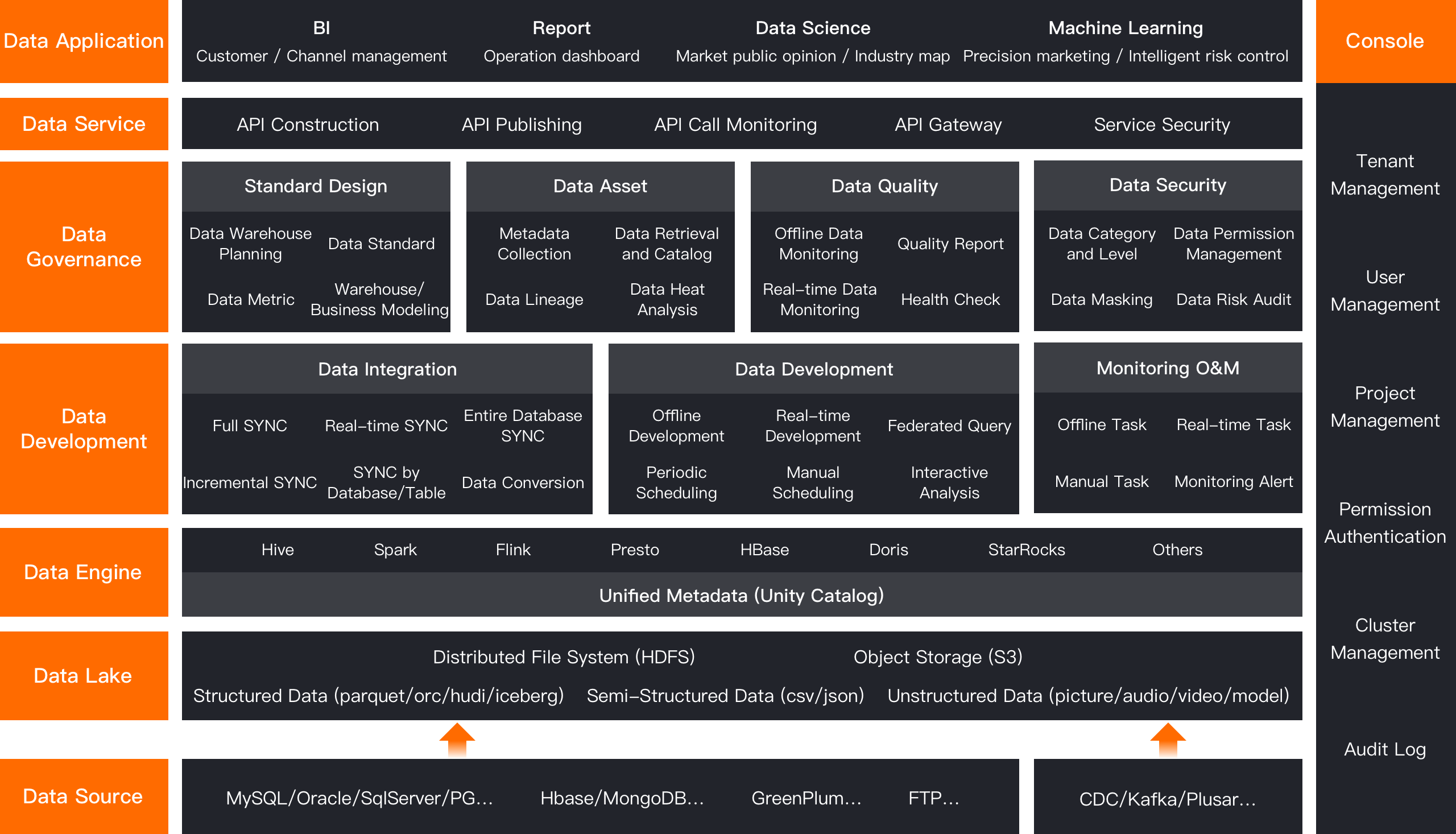

With the surge in data volume, managing big data has become increasingly challenging for enterprises, limiting the development of data applications. CyberEngine offers a one-stop big data infrastructure management platform that integrates and optimizes big data, data lakes, analytics, and AI components. This enhances the stability and performance of data infrastructure, helping enterprises to unlock data value and boost business competitiveness.

Through a web-based interface, CyberEngine lets users deploy and maintain big data clusters without writing code. It supports one-click deployment and configuration for a range of data warehouse and data lake scenarios.

Built on cloud-native technology, CyberEngine supports architectures such as storage-compute separation, unified stream and batch processing, and lakehouse. This increases resource utilization, improves system reliability, and reduces management complexity.

Implements secure management of big data clusters with Kerberos, OpenLDAP, and Ranger, and isolates user resources through multi-tenancy.

Fully integrates big data components from both the Hadoop ecosystem and non-Hadoop systems, providing solutions for a variety of big data platform scenarios.

Following the principle of "open source, giving back to the community", both the deeply optimized big data components and the deployment management platform are open source, making them easy for developers to access and integrate.

Provides comprehensive big data cluster management and monitoring, helping users track health status, resource utilization, and task execution in real time. This improves operational efficiency and cluster stability.

Fully integrates mainstream big data components, including Hadoop, Spark, Flink, Kafka, and Hudi. It enables rapid deployment of distributed big data clusters and provides component management features such as version control, configuration management, and log querying, reducing operational cost and complexity.

Adopts cloud-native and containerization technologies for high scalability and fault tolerance. Elastic cluster scaling enables a rapid response to changing business demands and to faults.

Supports a storage-compute separation architecture, which decouples storage resources from compute resources. This improves compute resource utilization and system stability while reducing storage costs.

Supports a unified stream and batch processing model, enabling seamless switching between real-time and batch processing. This makes it easier for users to handle different types of data.

CyberEngine helps users build a big data lakehouse and supports rapid ingestion and processing of large datasets. It supports mainstream big data components such as HDFS, Hive, Flink, Spark, Hudi, and Iceberg, so users can quickly ingest and manage data while enterprises gain efficient, stable, and scalable data storage, querying, and analysis.

WeChat Official Account

WeChat Tech Account

Douyin Account

WeChat Group Chat

Scan to contact your dedicated support